Running LLMs Locally with Ollama

Is there any value in running small LLMs (≤16GB) locally when all the big tech companies offer free credits for truly large LLMs? Will my poor graphics card explode under my desk?

- Getting Ollama Running

- Finding Our First Model

- Text Prompts

- Image Prompts

- Ensuring We’re On the GPU

- Tokens Per Second

- In the Future

Getting Ollama Running

I have the following system specs, displayed courtesy of screenfetch.

The key takeaway is that I have 16 GB of VRAM available in my GPU, so I’m limited to models that are ≤16GB.

I simply Googled “run llms locally” and followed this useful guide from Jeremy Morgan. Here are the two commands I needed to get up and running. Be careful when piping a downloaded script straight into sh: it runs whatever the server sends, so it is worth reading the script first.

alexshank@alex-desktop:~/Desktop/main/repos/blog-astro$ curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

[sudo] password for alexshank:

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.

>>> NVIDIA GPU installed.

alexshank@alex-desktop:~/Desktop/main/repos/blog-astro$ ollama run mistral-small3.1

pulling manifest

pulling 1fa8532d986d... 100% ▕████████████████████████████████▏ 15 GB

pulling 6db27cd4e277... 100% ▕████████████████████████████████▏ 695 B

pulling 70a4dab5e1d1... 100% ▕████████████████████████████████▏ 1.5 KB

pulling a00920c28dfd... 100% ▕████████████████████████████████▏ 17 B

pulling 9b6ac0d4e97e... 100% ▕████████████████████████████████▏ 494 B

verifying sha256 digest

writing manifest

success

>>> hello

Hello! How can I assist you today?

>>> What's the capital of italy?

The capital of Italy is Rome.

Finding Our First Model

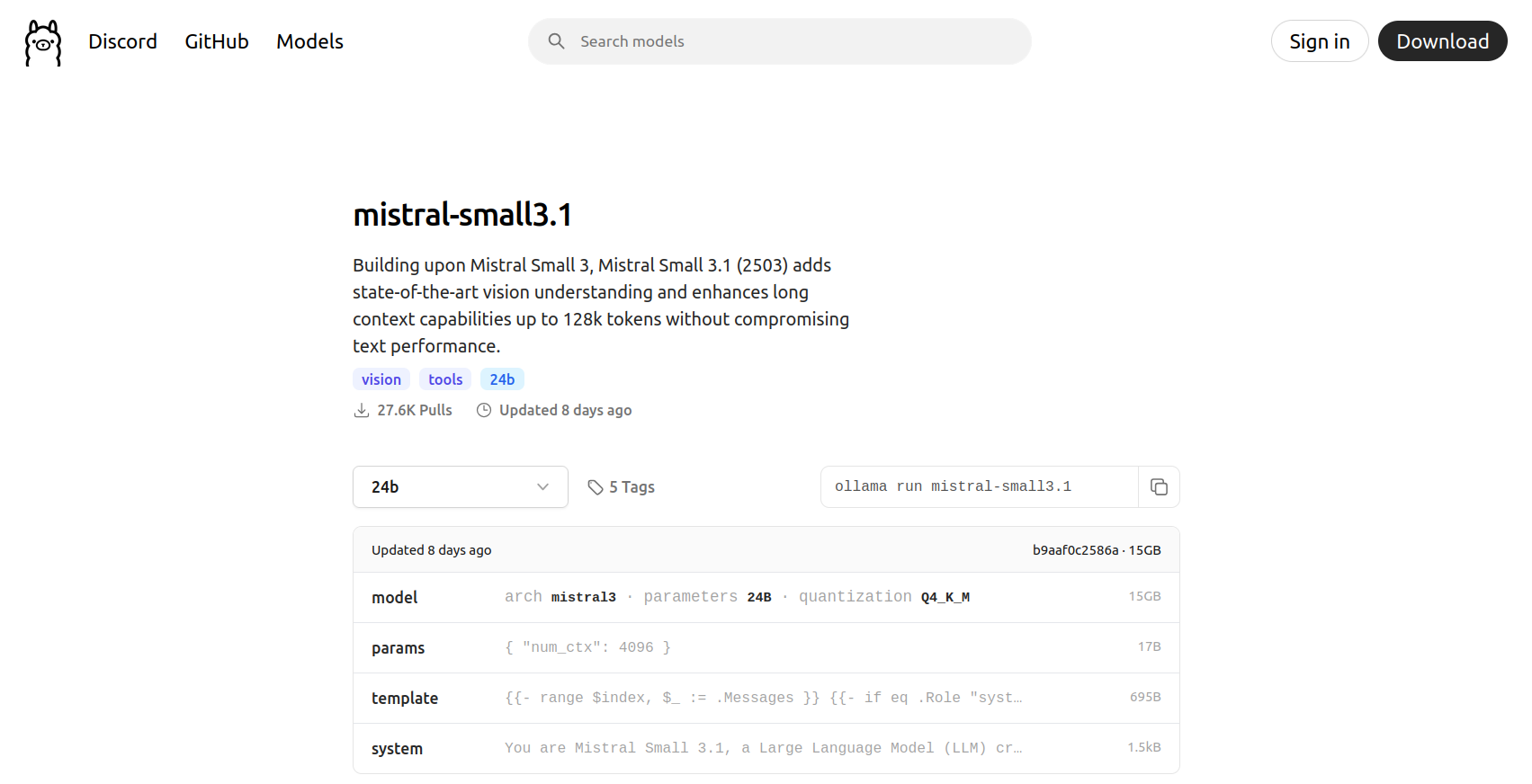

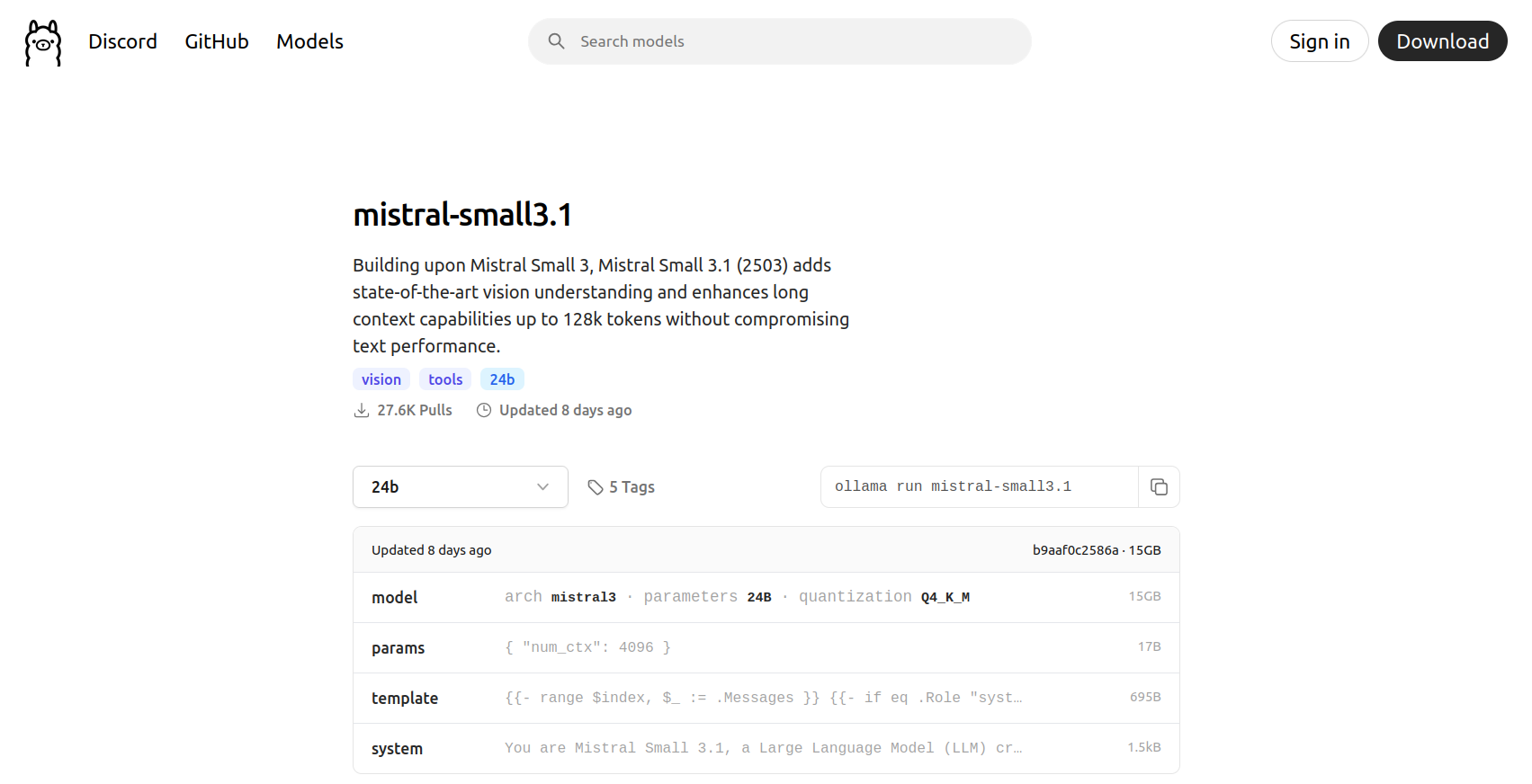

We’re just playing around, so I looked at the most popular models on the Ollama site and chose one that was as close to 16GB as possible.

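It turns out that the sweet spot for model size is about half of my available VRAM. The weights are not the only thing that has to fit: during inference the model also keeps a cache for every token in the context, and with a large context that cache eats up the remaining VRAM.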
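To get a feel for how quickly context eats VRAM, here is a rough back-of-the-envelope sketch of the key/value cache size for a transformer. The layer count, number of KV heads, and head dimension below are assumed values picked for illustration, not numbers taken from the mistral-small3.1 model card, and the estimate ignores runtime overhead, activation buffers, and any cache quantization.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value=2):
    """Rough size in bytes of the key/value cache for a single sequence."""
    # Factor of 2: one key vector and one value vector are stored
    # per layer, per KV head, per token in the context.
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


# Hypothetical architecture numbers, chosen only for illustration.
ASSUMED_ARCH = dict(n_layers=40, n_kv_heads=8, head_dim=128)

for context_len in (4096, 32768, 131072):
    size = kv_cache_bytes(context_len=context_len, **ASSUMED_ARCH)
    print(f"{context_len:>7} tokens -> {size / GIB:.2f} GiB of KV cache")
```

With these assumed numbers and 16-bit cache values, a 32768-token context alone needs 5 GiB, which would not fit next to 15 GB of weights on a 16 GB card.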

mistral-small3.1 looks nice to start out with. It even has some vision capabilities.

Text Prompts

Here are some selected prompts and responses. They help us get a feel for the performance. Longer responses took tens of seconds to produce.

First local prompt ever (!!):

>>> hello

Hello! How can I assist you today?

Standard opener, nothing surprising or particularly clever here.

Basic factual Q&A:

>>> What's the capital of italy?

The capital of Italy is Rome.

Correct, direct, and unremarkable: a classic factual query.

Beginner-friendly code explanation:

>>> how do you make a for loop in python?

In Python, a `for` loop is used to iterate over a sequence (such as a list, tuple,

dictionary, set, or string). Here is the basic syntax for a `for` loop:

```python

for item in sequence:

    # Code block to be executed for each item in the sequence

```

Here are a few examples to illustrate how to use a `for` loop in different contexts:

### Iterating over a list:

```python

fruits = ["apple", "banana", "cherry"]

for fruit in fruits:

    print(fruit)

```

### Iterating over a range of numbers:

```python

for i in range(5):

    print(i)

```

### Iterating over a string:

```python

for char in "hello":

    print(char)

```

### Iterating over a dictionary:

```python

person = {"name": "Alice", "age": 30, "city": "New York"}

for key, value in person.items():

    print(f"{key}: {value}")

```

### Using `enumerate` to get both index and value:

```python

fruits = ["apple", "banana", "cherry"]

for index, fruit in enumerate(fruits):

    print(f"Index {index}: {fruit}")

```

### Using `zip` to iterate over multiple sequences:

```python

list1 = [1, 2, 3]

list2 = ['a', 'b', 'c']

for item1, item2 in zip(list1, list2):

    print(item1, item2)

```

A very complete answer, maybe too complete. It covers lots of variations (looping over strings and dicts, using enumerate and zip, etc.).

A question of personal opinion:

>>> What's your favorite vim trick?

I don't have personal favorites or preferences, but I can certainly share a popular and

useful Vim trick that many users find helpful!

One of the most powerful features of Vim is the ability to perform complex text

manipulations using macros. Macros allow you to record a series of keystrokes and then

play them back to automate repetitive tasks.

Here's how you can record and use a macro in Vim:

1. **Start recording a macro**:

- Press `q` followed by a register name (e.g., `a`, `b`, `c`, etc.). For example, to

start recording into register `a`, press `qa`.

2. **Perform the actions you want to record**:

- Perform the series of keystrokes or commands you want to automate.

3. **Stop recording**:

- Press `q` again to stop recording.

4. **Play back the macro**:

- To play back the macro, press `@` followed by the register name. For example, to play

back the macro recorded in register `a`, press `@a`.

- To play back the macro multiple times, you can use a count prefix. For example, to

play back the macro recorded in register `a` ten times, press `10@a`.

### Example

Suppose you want to automate the process of commenting out a series of lines. You can

record a macro to do this:

1. Start recording into register `a` by pressing `qa`.

2. Move to the beginning of the line you want to comment out by pressing `I`.

3. Insert the comment character (e.g., `#` for shell scripts or `//` for C-like languages)

and press `Esc`.

4. Move to the next line by pressing `j`.

5. Stop recording by pressing `q`.

Now, you can play back the macro to comment out multiple lines by pressing `@a` for each

line or `10@a` to comment out ten lines.

This is just one of many powerful features in Vim that can greatly enhance your

productivity once you get the hang of it!

A very solid, practical tip. Not everyone knows it, and it's a great example of the model offering genuinely helpful tooling knowledge.

Out-of-date training data:

>>> What is the MCP protocol for LLMs?

As of my last update in October 2023, there isn't a widely recognized or standardized protocol specifically named "MCP" for Large

Language Models (LLMs). It's possible that "MCP" could refer to a specific internal protocol, framework, or concept within a

particular organization or research context that hasn't gained widespread recognition or documentation.

If you're referring to a specific protocol, framework, or concept related to LLMs, could you provide more context or clarify what you

mean by "MCP protocol"? This will help me give you a more accurate and helpful response.

Alternatively, if you're interested in general protocols or frameworks related to LLMs, here are a few notable ones:

1. **API Protocols**: Many LLMs are accessed via APIs (Application Programming Interfaces) provided by companies like OpenAI, Google,

and others. These APIs typically use RESTful protocols over HTTP/HTTPS.

2. **Model Serving Frameworks**: Frameworks like TensorFlow Serving, TorchServe, and ONNX Runtime are used to deploy and serve

machine learning models, including LLMs, in production environments.

3. **Communication Protocols**: For distributed training and inference of LLMs, protocols like gRPC (Google Remote Procedure Call)

are often used to facilitate communication between different components of a system.

4. **Data Formats**: Common data formats for interacting with LLMs include JSON (JavaScript Object Notation) for API requests and

responses, and protocols like Protocol Buffers (protobuf) for efficient serialization of structured data.

If you provide more details or clarify your question, I can offer more targeted information!

Of course, a local model is only as current as its training data, and this one has a cutoff that predates the Model Context Protocol. It does give some helpful guesses as to what MCP could be, though.

Image Prompts

TODO.

Ensuring We’re On the GPU

TODO.

Tokens Per Second

TODO.

In the Future

We could consider using local LLMs to explore…

- Subjective or empirical scoring against other LLMs.

- Communication via the Model Context Protocol.

- Integrating the local model into VS Code Copilot.

- Creating a local application that leverages the Ollama server API.

- Limits of my machine and graphics card.
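As a starting point for the local application idea, here is a minimal sketch that talks to the Ollama server over HTTP using only the Python standard library. It assumes the service is listening on Ollama's default address (`localhost:11434`) and that `mistral-small3.1` has already been pulled; the helper names (`build_request`, `ask`, `tokens_per_second`, `main`) are my own and not part of Ollama. The speed calculation relies on the `eval_count` and `eval_duration` (nanoseconds) fields that the generate endpoint returns.

```python
import json
import urllib.request

# Assumption: the Ollama service is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt):
    """Build a non-streaming generate request for the local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        OLLAMA_URL,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask(model, prompt):
    """Send one prompt and return the parsed JSON reply."""
    with urllib.request.urlopen(build_request(model, prompt)) as resp:
        return json.load(resp)


def tokens_per_second(reply):
    """Generation speed: eval_count tokens over eval_duration nanoseconds."""
    return reply["eval_count"] * 1e9 / reply["eval_duration"]


def main():
    reply = ask("mistral-small3.1", "What's the capital of italy?")
    print(reply["response"])
    print(f"{tokens_per_second(reply):.1f} tokens/s")
```

With the service running, calling `main()` should print the answer followed by a rough tokens-per-second figure for that one response. A single short prompt is a noisy measurement, so averaging over several longer generations would give a fairer number.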